Statistical Testing

Aim: Acquire knowledge and experience in the area of statistical testing

Objectives:

- Research and understand how and when to use core statistical testing methods

- Ask and answer questions that can be answered using one or more statistical tests on the selected dataset

Imports

import pandas as pd
import numpy as np
import seaborn as sns
import matplotlib.pyplot as plt
import scipy.stats as ss

# The 'seaborn' style name was deprecated in Matplotlib 3.6 in favour of 'seaborn-v0_8'
plt.style.use('seaborn-v0_8')
sns.set_style('whitegrid')

What is the difference between a parametric and a non-parametric test?

Parametric tests assume that the data follow a particular distribution (often the normal distribution); therefore, several conditions of validity must be met for the result of a parametric test to be reliable.

Non-parametric tests do not assume a specific distribution, so they can be applied even when the conditions of validity of a parametric test are not met.

What is the advantage of using a non-parametric test?

Non-parametric tests are more robust than parametric tests. In other words, they are valid in a broader range of situations (they have fewer conditions of validity).

What is the advantage of using a parametric test?

The advantage of using a parametric test instead of its non-parametric equivalent is that, when its conditions of validity are met, the parametric test has more statistical power.

In other words, a parametric test is more likely to reject H0 when H0 is false. Most of the time, the p-value of a parametric test will be lower than the p-value of its non-parametric equivalent run on the same data.

$$t = \frac{m - \mu}{s / \sqrt{n}}$$

$t$ = the one-sample Student's t statistic

$m$ = sample mean

$\mu$ = theoretical (hypothesized) population mean

$s$ = sample standard deviation

$n$ = sample size
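A minimal sketch of the one-sample t statistic computed by hand from the symbols above ($m$, $\mu$, $s$, $n$), with a two-sided p-value taken from the t distribution with $n - 1$ degrees of freedom, then checked against ss.ttest_1samp. The sample values and variable names are hypothetical, chosen only for illustration.

```python
import numpy as np
import scipy.stats as ss

# Hypothetical sample, made up for illustration
sample = np.array([1.2, 0.8, 1.9, 1.4, 0.6, 1.1, 1.7, 0.9])
mu = 1.5                      # hypothesized (theoretical) population mean

m = sample.mean()             # sample mean
s = sample.std(ddof=1)        # sample standard deviation (n - 1 in the denominator)
n = sample.size               # sample size

# t = (m - mu) / (s / sqrt(n))
t_manual = (m - mu) / (s / np.sqrt(n))
# Two-sided p-value from a t distribution with n - 1 degrees of freedom
p_manual = 2 * ss.t.sf(abs(t_manual), df=n - 1)

t_scipy, p_scipy = ss.ttest_1samp(sample, mu)
print(f'manual: t={t_manual:.4f}, p={p_manual:.4f}')
print(f'scipy:  t={t_scipy:.4f}, p={p_scipy:.4f}')
```

Note that s uses ddof=1 (the sample standard deviation); using the population formula would give a slightly different t.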

The Student’s t-test is a statistical hypothesis test about means: the one-sample version tests whether the mean of a sample differs from a hypothesized value, and the two-sample version tests whether two samples are likely to have been drawn from populations with the same mean.

It is named after the pseudonym “Student” used by William Gosset, who developed the test.

The two-sample test compares the means of the two samples to see whether they are significantly different from each other. It divides the difference between the means by the standard error of that difference; the resulting t statistic is compared with a t distribution to judge how likely a difference at least this large would be if the two populations had the same mean (the null hypothesis).

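Good To Know

- Works with small samples

- When comparing two groups, the classic Student's t-test assumes that both are approximately normally distributed with equal variances (Welch's t-test drops the equal-variance assumption)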

$$t = \frac{\text{observed difference between sample means}}{\text{standard error of the difference between the means}}$$
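The ratio above can be sketched for two independent samples, assuming (as the classic Student's test does) that both populations share one variance, so the standard error uses a pooled variance. The arrays x1 and x2 are hypothetical; the result is compared with ss.ttest_ind, whose default is equal_var=True.

```python
import numpy as np
import scipy.stats as ss

# Two hypothetical independent samples
x1 = np.array([5.1, 4.9, 5.6, 5.8, 6.0, 5.3])
x2 = np.array([4.2, 4.8, 4.5, 5.0, 4.4, 4.7, 4.9])
n1, n2 = x1.size, x2.size

# Pooled variance: assumes both populations share the same variance
pooled_var = ((n1 - 1) * x1.var(ddof=1) + (n2 - 1) * x2.var(ddof=1)) / (n1 + n2 - 2)
# Standard error of the difference between the two means
se_diff = np.sqrt(pooled_var * (1 / n1 + 1 / n2))

# t = observed difference between means / standard error of that difference
t_manual = (x1.mean() - x2.mean()) / se_diff
p_manual = 2 * ss.t.sf(abs(t_manual), df=n1 + n2 - 2)

t_scipy, p_scipy = ss.ttest_ind(x1, x2)   # equal_var=True by default
print(t_manual, t_scipy)
print(p_manual, p_scipy)
```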

In this example, we create two series of 10 values each, drawn at random from the standard normal (Gaussian) distribution.

data = pd.DataFrame({

'data1': np.random.normal(size=10),

'data2': np.random.normal(size=10)

})

data[:3]

The histogram below shows that the values of the two series overlap, so we can use statistical tests to check whether any apparent difference or relationship between them could simply be due to chance.

Note: because this data is random, you will probably get different values each time you run the notebook.

data.plot.hist(alpha=.8,figsize=(5,3));

data.describe().T

The one-sample t-test (ss.ttest_1samp) is a two-sided test of the null hypothesis that the expected value (mean) of a sample of independent observations is equal to a given population mean.

H0 = 'the population mean is equal to {}'
alpha = 0.05
hypothesized_population_mean = 1.5
stat, p = ss.ttest_1samp(data['data1'], hypothesized_population_mean)
print(f'Statistic: {stat}\nP-Value: {p:.4f}')
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0.format(hypothesized_population_mean)}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0.format(hypothesized_population_mean)}')

An unpaired t-test (also known as an independent t-test) is a statistical procedure that compares the means of two independent or unrelated groups to determine whether there is a significant difference between them.

H0 = 'the means of both populations are equal'
alpha = 0.05
stat, p = ss.ttest_ind(data['data1'], data['data2'])
print(f'Statistic: {stat}\nP-Value: {p:.3f}')
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')
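The ss.ttest_ind call above pools the two variances. When the groups may have different spreads, ss.ttest_ind(..., equal_var=False) runs Welch's t-test instead. The sketch below reproduces Welch's statistic and its Welch-Satterthwaite degrees of freedom by hand; the samples are hypothetical.

```python
import numpy as np
import scipy.stats as ss

# Hypothetical samples with clearly different spreads and sizes
x1 = np.array([10.2, 9.8, 10.5, 10.1, 9.9, 10.3])
x2 = np.array([8.0, 12.5, 6.9, 13.1, 9.4, 11.8, 7.2, 12.0])
n1, n2 = x1.size, x2.size

# Each group keeps its own variance (no pooling)
v1 = x1.var(ddof=1) / n1
v2 = x2.var(ddof=1) / n2
t_manual = (x1.mean() - x2.mean()) / np.sqrt(v1 + v2)

# Welch-Satterthwaite approximation of the degrees of freedom
df_welch = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
p_manual = 2 * ss.t.sf(abs(t_manual), df=df_welch)

t_scipy, p_scipy = ss.ttest_ind(x1, x2, equal_var=False)
print(t_manual, t_scipy, df_welch)
print(p_manual, p_scipy)
```

The degrees of freedom are usually non-integer and never exceed the pooled value n1 + n2 - 2, which makes Welch's test more conservative when the variances differ.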

The paired sample t-test, sometimes called the dependent sample t-test, is a statistical procedure used to determine whether the mean difference between two sets of observations is zero. In a paired sample t-test, each subject or entity is measured twice, resulting in pairs of observations.
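Because the paired test asks whether the mean difference is zero, it should match a one-sample t-test on the per-subject differences against 0. A small check with hypothetical before/after measurements:

```python
import numpy as np
import scipy.stats as ss

# Hypothetical measurements of the same six subjects, before and after
before = np.array([72.0, 75.5, 68.2, 80.1, 77.3, 70.8])
after = np.array([70.4, 74.0, 68.9, 77.5, 75.0, 69.1])

t_paired, p_paired = ss.ttest_rel(before, after)

# Same test expressed as a one-sample t-test: is the mean of the differences 0?
diffs = before - after
t_diff, p_diff = ss.ttest_1samp(diffs, 0.0)

print(t_paired, t_diff)
print(p_paired, p_diff)
```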

H0 = 'means difference between two sample is 0'

a = 0.05

stat, p = ss.ttest_rel(data['data1'],data['data2'])

print(f'Statistic: {stat}\nP-Value: {p:.3f}')

if p <= a:

print('Statistically significant / We can trust the statistic')

print(f'Reject H0: {H0}')

else:

print('Statistically not significant / We cannot trust the statistic')

print(f'Accept H0: {H0}')

ANOVA determines whether the groups created by the levels of the independent variable are statistically different by calculating whether the means of the treatment levels are different from the overall mean of the dependent variable.

The null hypothesis (H0) of ANOVA is that there is no difference among group means.

The alternative hypothesis (Ha) is that at least one group mean differs significantly from the others.
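The comparison with the overall mean can be sketched by computing the one-way ANOVA F statistic by hand: between-group variability (group means around the grand mean) divided by within-group variability (observations around their own group mean), each scaled by its degrees of freedom. The three groups are hypothetical; the last lines also show that with only two groups F equals the square of the pooled t statistic.

```python
import numpy as np
import scipy.stats as ss

# Three hypothetical groups (levels of the independent variable)
groups = [
    np.array([4.1, 5.0, 4.6, 5.3, 4.8]),
    np.array([5.9, 6.3, 5.5, 6.8, 6.1]),
    np.array([5.0, 4.7, 5.6, 5.2, 4.9]),
]
all_values = np.concatenate(groups)
grand_mean = all_values.mean()
k, N = len(groups), all_values.size

# Between-group variability: how far each group mean sits from the overall mean
ss_between = sum(g.size * (g.mean() - grand_mean) ** 2 for g in groups)
# Within-group variability: spread of observations around their own group mean
ss_within = sum(((g - g.mean()) ** 2).sum() for g in groups)

f_manual = (ss_between / (k - 1)) / (ss_within / (N - k))
p_manual = ss.f.sf(f_manual, k - 1, N - k)

f_scipy, p_scipy = ss.f_oneway(*groups)
print(f_manual, f_scipy)
print(p_manual, p_scipy)

# With exactly two groups, F equals the square of the pooled two-sample t statistic
f_two, _ = ss.f_oneway(groups[0], groups[1])
t_two, _ = ss.ttest_ind(groups[0], groups[1])
print(f_two, t_two ** 2)
```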

The assumptions of the ANOVA test are the same as the general assumptions for any parametric test:

- Independence of observations: the data were collected using statistically-valid methods, and there are no hidden relationships among observations. If your data fail to meet this assumption because you have a confounding variable that you need to control for statistically, use an ANOVA with blocking variables.

- Normally-distributed response variable: The values of the dependent variable follow a normal distribution.

- Homogeneity of variance: The variation within each group being compared is similar for every group. If the variances are different among the groups, then ANOVA probably isn’t the right fit for the data.
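One common way to probe the last two assumptions (not the only one) is a Shapiro-Wilk test per group for normality and a Levene test across groups for equal variances. The sketch uses hypothetical simulated groups. Independence of observations depends on how the data were collected and cannot be checked this way, and a non-significant result only means there is no evidence against the assumption, not proof that it holds.

```python
import numpy as np
import scipy.stats as ss

# Hypothetical groups drawn from normal distributions with equal spread
rng = np.random.default_rng(0)
groups = [rng.normal(loc=mean, scale=1.0, size=30) for mean in (0.0, 0.3, 0.6)]
alpha = 0.05

# Shapiro-Wilk test, H0: the group comes from a normal distribution
shapiro_p = [ss.shapiro(g).pvalue for g in groups]
# Levene test, H0: all groups have the same variance
levene_p = ss.levene(*groups).pvalue

normality_ok = all(p > alpha for p in shapiro_p)
equal_variance_ok = bool(levene_p > alpha)
print('Shapiro-Wilk p-values:', np.round(shapiro_p, 3))
print('Levene p-value:', round(float(levene_p), 3))
print('No evidence against normality:', normality_ok)
print('No evidence against equal variances:', equal_variance_ok)
```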

data.mean()

H0 = 'all groups have the same population mean'
alpha = 0.05
stat, p = ss.f_oneway(data['data1'], data['data2'])
print(f'Statistic: {stat}\nP-Value: {p:.3f}')
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')

Linear regression is used when we want to predict the value of a variable based on the value of another variable. The variable we want to predict is called the dependent variable (or sometimes, the outcome variable). The variable we are using to predict the other variable's value is called the independent variable (or sometimes, the predictor variable).

The p-value for each term tests the null hypothesis that the coefficient is equal to zero (no effect). A low p-value (< 0.05) indicates that you can reject the null hypothesis. In other words, a predictor that has a low p-value is likely to be a meaningful addition to your model because changes in the predictor's value are related to changes in the response variable.

Conversely, a larger (non-significant) p-value means the data do not provide evidence that changes in the predictor are associated with changes in the response.

Regression coefficients represent the mean change in the response variable for one unit of change in the predictor variable while holding other predictors in the model constant. This statistical control that regression provides is important because it isolates the role of one variable from all of the others in the model.

The key to understanding the coefficients is to think of them as slopes, and they’re often called slope coefficients.
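For a single predictor, the least-squares slope is cov(x, y) / var(x) and the intercept is mean(y) - slope * mean(x). The sketch below computes both on hypothetical data and compares them with ss.linregress; the final line illustrates the slope as the predicted change in y for a one-unit increase in x.

```python
import numpy as np
import scipy.stats as ss

# Hypothetical predictor (x) and response (y)
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0])
y = np.array([2.1, 3.9, 6.2, 8.1, 9.8, 12.2])

# Least-squares estimates for a single predictor:
# slope = cov(x, y) / var(x), intercept = mean(y) - slope * mean(x)
slope_manual = np.cov(x, y)[0, 1] / x.var(ddof=1)
intercept_manual = y.mean() - slope_manual * x.mean()

res = ss.linregress(x, y)
print(slope_manual, res.slope)
print(intercept_manual, res.intercept)

# Predicted change in y when x goes from 3 to 4 equals the slope
print((res.intercept + res.slope * 4.0) - (res.intercept + res.slope * 3.0))
```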

reg_data = pd.DataFrame({

'data1': np.random.gamma(25,size=20)

})

reg_data['data2'] = reg_data['data1'].apply(lambda x: x + np.random.randint(1,25))

reg_data[:5]

sns.regplot(x=reg_data['data1'],y=reg_data['data2']);

slope, intercept, r, p, se = ss.linregress(reg_data['data1'], reg_data['data2'])

print(f'Slope: {slope}\nIntercept: {intercept}\nP-Value: {p}\nr: {r}\nse: {se}')

H0 = 'changes in the predictor are not associated with changes in the response'
alpha = 0.05
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')

The Pearson correlation coefficient measures the linear relationship between two datasets. Strictly speaking, the significance test for Pearson’s correlation assumes that each dataset is normally distributed. Like other correlation coefficients, it varies between -1 and +1, with 0 implying no correlation.

Correlations of -1 or +1 imply an exact linear relationship.

Positive correlations imply that as x increases, so does y.

Negative correlations imply that as x increases, y decreases.

The p-value roughly indicates the probability of an uncorrelated system producing datasets that have a Pearson correlation at least as extreme as the one computed from these datasets. The p-values are not entirely reliable but are probably reasonable for datasets larger than 500 or so.
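A sketch of where the coefficient and its p-value come from: r is the covariance of x and y divided by the product of their spreads, and under the null hypothesis (no correlation, normally distributed data) the quantity t = r * sqrt((n - 2) / (1 - r^2)) follows a t distribution with n - 2 degrees of freedom. The data are hypothetical; the result is compared with ss.pearsonr.

```python
import numpy as np
import scipy.stats as ss

# Hypothetical paired observations
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0])
y = np.array([2.3, 1.9, 3.8, 4.1, 3.6, 5.9, 6.2, 5.8])
n = x.size

dx, dy = x - x.mean(), y - y.mean()
# r = covariance / (product of standard deviations)
r_manual = (dx * dy).sum() / np.sqrt((dx ** 2).sum() * (dy ** 2).sum())

# Under H0, t = r * sqrt((n - 2) / (1 - r^2)) follows a t distribution with n - 2 dof
t_stat = r_manual * np.sqrt((n - 2) / (1 - r_manual ** 2))
p_manual = 2 * ss.t.sf(abs(t_stat), df=n - 2)

r_scipy, p_scipy = ss.pearsonr(x, y)
print(r_manual, r_scipy)
print(p_manual, p_scipy)
```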

H0 = 'the two variables are uncorrelated'
alpha = 0.05
stat, p = ss.pearsonr(data['data1'], data['data2'])
print(f'Statistic: {stat}\nP-Value: {p:.3f}')
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')

There are two types of chi-square tests. Both use the chi-square statistic and distribution for different purposes:

A chi-square goodness of fit test determines whether sample data match a hypothesized population distribution.

A chi-square test for independence compares two variables in a contingency table to see if they are related. In a more general sense, it tests whether the distributions of categorical variables differ from each other.

- A very small chi-square test statistic means that the observed data fit the expected data very well. For a test of independence, this is consistent with the variables being independent (no evidence of a relationship).

- A very large chi-square test statistic means that the observed data do not fit the expected data well. For a test of independence, this is evidence that the variables are related.
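For the test of independence, the expected count of each cell is (row total × column total) / grand total, and the statistic sums (observed - expected)² / expected over all cells, with (rows - 1) × (columns - 1) degrees of freedom. The table below is hypothetical; the manual values are compared with ss.chi2_contingency, which applies Yates' continuity correction only when there is exactly one degree of freedom (here there are two).

```python
import numpy as np
import scipy.stats as ss

# Hypothetical 2x3 contingency table of observed counts
observed = np.array([[12, 7, 9],
                     [5, 14, 10]])

row_totals = observed.sum(axis=1, keepdims=True)
col_totals = observed.sum(axis=0, keepdims=True)
# Expected counts if the two variables were independent
expected_manual = row_totals * col_totals / observed.sum()

chi2_manual = ((observed - expected_manual) ** 2 / expected_manual).sum()
dof_manual = (observed.shape[0] - 1) * (observed.shape[1] - 1)
p_manual = ss.chi2.sf(chi2_manual, dof_manual)

# No continuity correction is applied because dof == 2
stat, p, dof, expected = ss.chi2_contingency(observed)
print(chi2_manual, stat)
print(p_manual, p)
```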

animals = ['dog','cat','horse','dragon','unicorn']

chi_data = pd.DataFrame({x:[np.random.randint(5,25) for _ in range(3)] for x in animals},index=['village1','village2','village3'])

chi_data

If the calculated chi-square value is less than or equal to the tabulated (critical) chi-square value, we fail to reject H0; otherwise, we reject it.
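The critical-value rule can be sketched with ss.chi2.ppf, which gives the tabulated value for a chosen alpha. The counts below are hypothetical, and H0 assumes they are spread evenly across the three categories (the default expectation of ss.chisquare). Comparing the statistic with the critical value leads to the same decision as comparing the p-value with alpha.

```python
import numpy as np
import scipy.stats as ss

# Hypothetical counts of one animal in three villages
observed = np.array([18, 9, 22])
# H0: the counts are spread evenly, so each village expects the same count
expected = np.full(observed.size, observed.sum() / observed.size)

chi2_stat = ((observed - expected) ** 2 / expected).sum()
dof = observed.size - 1
alpha = 0.05

critical = ss.chi2.ppf(1 - alpha, dof)   # tabulated (critical) value
p_value = ss.chi2.sf(chi2_stat, dof)

# Both decision rules agree
reject_by_critical = chi2_stat > critical
reject_by_p = p_value < alpha
print(f'chi2={chi2_stat:.3f}, critical={critical:.3f}, p={p_value:.4f}')
print('Reject H0' if reject_by_critical else 'Fail to reject H0')

stat_scipy, p_scipy = ss.chisquare(observed)   # uniform expected counts by default
```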

# Goodness of fit: are the dog counts spread evenly across the villages?
H0 = 'dogs are evenly distributed across the villages'
alpha = 0.05
stat, p = ss.chisquare(chi_data['dog'])
print(f'Statistic: {stat}\nP-Value: {p:.3f}')
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')

# Test of independence between village (rows) and animal (columns)
H0 = 'the animal counts are independent of the village'
alpha = 0.05
stat, p, dof, expected = ss.chi2_contingency(chi_data.values)
print(f'Statistic: {stat}\nP-Value: {p:.3f}\nDOF: {dof}')
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')

Use Fisher's exact test of independence when you have two nominal variables and you want to see whether the proportions of one variable differ depending on the value of the other variable. Use it when the sample size is small.

The null hypothesis is that the relative proportions of one variable are independent of the second variable; in other words, the proportions of one variable are the same for different values of the second variable.
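A sketch of what the test computes for a 2x2 table: with the row and column totals fixed, the top-left count follows a hypergeometric distribution under H0, and the two-sided p-value adds up the probabilities of all tables that are no more likely than the observed one. The table is hypothetical, and the small relative tolerance used to absorb floating-point ties is an assumption of this sketch. The result is compared with ss.fisher_exact, which also returns the sample odds ratio (a·d)/(b·c).

```python
import numpy as np
import scipy.stats as ss

# Hypothetical 2x2 table [[a, b], [c, d]]
table = np.array([[8, 2],
                  [1, 5]])
a, b = table[0]
c, d = table[1]

M = table.sum()   # total number of observations
n = a + b         # first-row total
N = a + c         # first-column total

# With fixed margins, the top-left count is hypergeometric under H0
dist = ss.hypergeom(M, n, N)
pmfs = dist.pmf(np.arange(0, min(n, N) + 1))
p_observed = dist.pmf(a)

# Two-sided p-value: probability of all tables no more likely than the observed one
# (1e-7 relative tolerance is an assumption, to absorb floating-point ties)
p_manual = pmfs[pmfs <= p_observed * (1 + 1e-7)].sum()
odds_manual = (a * d) / (b * c)   # sample odds ratio

odds_scipy, p_scipy = ss.fisher_exact(table)
print(odds_manual, odds_scipy)
print(p_manual, p_scipy)
```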

# 2x2 table: rows = dragon and unicorn, columns = village2 and village3
fisher_data = chi_data[1:].T[3:]
fisher_data

H0 = 'the two groups are independent'
alpha = 0.05
# fisher_exact returns the odds ratio and the p-value
oddsratio, p = ss.fisher_exact(fisher_data)
print(f'Odds Ratio: {oddsratio}\nP-Value: {p:.3f}')
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')

The Spearman rank-order correlation coefficient is a nonparametric measure of the monotonicity of the relationship between two datasets. Unlike the Pearson correlation, the Spearman correlation does not assume that both datasets are normally distributed.

Like other correlation coefficients, this one varies between -1 and +1 with 0 implying no correlation.

Correlations of -1 or +1 imply an exact monotonic relationship.

Positive correlations imply that as x increases, so does y.

Negative correlations imply that as x increases, y decreases.

The p-value roughly indicates the probability of an uncorrelated system producing datasets that have a Spearman correlation at least as extreme as the one computed from these datasets.
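Spearman's rho is Pearson's r computed on the ranks of the data (tied values get average ranks), which is why it captures monotonic rather than strictly linear relationships. The sketch checks this on hypothetical data, then uses y = x³ to show a monotonic but non-linear relationship where Spearman reaches 1 while Pearson stays below 1.

```python
import numpy as np
import scipy.stats as ss

# Hypothetical paired observations
x = np.array([3.1, 1.2, 5.6, 2.2, 4.8, 6.0, 0.5])
y = np.array([2.0, 1.5, 9.1, 2.9, 3.3, 12.4, 0.1])

# Spearman's rho is Pearson's r computed on the ranks (ties get average ranks)
rho_manual, _ = ss.pearsonr(ss.rankdata(x), ss.rankdata(y))
rho_scipy, p_scipy = ss.spearmanr(x, y)
print(rho_manual, rho_scipy)

# Monotonic but non-linear relationship: Spearman is 1, Pearson is below 1
x2 = np.arange(1, 11)
y2 = x2 ** 3
rho_mono, _ = ss.spearmanr(x2, y2)
r_mono, _ = ss.pearsonr(x2, y2)
print(rho_mono, r_mono)
```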

H0 = 'the two variables are uncorrelated'
alpha = 0.05
stat, p = ss.spearmanr(data['data1'], data['data2'])
print(f'Statistic: {stat}\nP-Value: {p:.3f}')
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')

Statistical Themes:

Note: there are 75 fields in total; the following are the themes the fields fall under.

- Home Owner Costs: Sum of utilities, property taxes.

- Second Mortgage: Households with a second mortgage statistics.

- Home Equity Loan: Households with a Home equity Loan statistics.

- Debt: Households with any type of debt statistics.

- Mortgage Costs: Statistics regarding mortgage payments, home equity loans, utilities and property taxes

- Gross Rent: Contract rent plus the estimated average monthly cost of utility features

- Gross Rent as Percent of Income: gross rent as a percentage of income (very interesting).

- High school Graduation: High school graduation statistics.

- Population Demographics: Population demographic statistics.

- Age Demographics: Age demographic statistics.

- Household Income: Total income of people residing in the household.

- Family Income: Total income of people related to the householder.

df = pd.read_csv('/content/drive/MyDrive/Datasets/real_estate_db.csv',encoding='latin8')

df[:3]

cols = ['state','state_ab','city','area_code','lat','lng','ALand','AWater','pop','male_pop','female_pop','debt','married','divorced','separated']

cdf = df[cols].copy()

Fancy scatter plot by Latitude and Longitude

# Longitude on the x-axis and latitude on the y-axis so the plot reads like a map
sns.scatterplot(data=cdf,x='lng',y='lat',hue='state');

plt.legend(bbox_to_anchor=(1.2, -0.1),fancybox=False, shadow=False, ncol=5);

Summary of population and area by state

summary = cdf.groupby('state_ab').agg({'pop':'sum','male_pop':'sum','female_pop':'sum','ALand':'sum','AWater':'sum','state':'first'})

summary

Male and Female distribution by state

(summary[['male_pop','female_pop']]
    .div(summary['pop'],axis=0)
    .plot.bar(stacked=True,rot=0,figsize=(25,5))
    .legend(bbox_to_anchor=(0.58, -0.1),fancybox=False, shadow=False, ncol=2));

States distribution by Land and Water

plt.figure(figsize=(10,5))
sns.scatterplot(data=summary.reset_index(),x='ALand',y='AWater')
for x in summary.reset_index().itertuples():
    plt.annotate(x.state,(x.ALand,x.AWater),va='top',ha='right');

plt.figure(figsize=(25,7))
sns.scatterplot(data=summary[summary.state != 'Alaska'],x='ALand',y='AWater');
for x in summary[summary.state != 'Alaska'].itertuples():
    plt.annotate(x.state,(x.ALand,x.AWater),va='top',ha='right');

First, let's check whether there are correlations between our variables

corr_data = cdf.select_dtypes(exclude=['object']).drop(['area_code','lat','lng'],axis=1).copy().dropna()

corr_matrix = pd.DataFrame([[ss.spearmanr(corr_data[c_x],corr_data[c_y])[1] for c_x in corr_data.columns] for c_y in corr_data.columns],columns=corr_data.columns,index=corr_data.columns)

corr_matrix[corr_matrix == 0] = np.nan

fig,ax = plt.subplots(1,2,figsize=(25,5))

sns.heatmap(corr_data.corr(method='spearman'),annot=True,fmt='.2f',ax=ax[0],cmap='Blues')

ax[0].set_title('Correlation Statistic')

sns.heatmap(corr_matrix,annot=True,fmt='.2f',ax=ax[1],cmap='Blues')

ax[1].set_title('P-Values')

plt.tight_layout()

H: Is there a correlation between land area and population? We expect that the more land, the more people

sns.regplot(x=cdf['ALand'],y=cdf['pop']);

slope, intercept, r, p, se = ss.linregress(cdf['ALand'],cdf['pop'])

print(f'Slope: {slope:.5f}\nIntercept: {intercept:.5f}\nP-Value: {p:.5f}\nr: {r:.5f}\nse: {se:.5f}')

H0 = 'changes in the predictor are not associated with changes in the response'
alpha = 0.05
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')

H: Is there a correlation between the percentage of debt and city population? We expect more debt where there are more people

H0 = 'the two variables are uncorrelated'
alpha = 0.05
# Drop rows with missing values in either column before correlating
tmp = cdf.dropna(subset=['pop','debt'])
stat, p = ss.pearsonr(tmp['pop'], tmp['debt'])
print(f'Statistic: {stat}\nP-Value: {p:.3f}')
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')

H: There is a relationship between the male population and female population

sns.regplot(x=cdf['male_pop'],y=cdf['female_pop']);

slope, intercept, r, p, se = ss.linregress(cdf['male_pop'],cdf['female_pop'])

print(f'Slope: {slope:.5f}\nIntercept: {intercept:.5f}\nP-Value: {p:.5f}\nr: {r:.5f}\nse: {se:.5f}')

H0 = 'changes in the predictor are not associated with changes in the response'
alpha = 0.05
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')

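# Note: male_pop and female_pop come from the same rows (areas), so the two samples are paired;
# ss.ttest_rel would take that pairing into account, while f_oneway treats them as independent groups
H0 = 'all groups have the same population mean'
alpha = 0.05
stat, p = ss.f_oneway(cdf['male_pop'], cdf['female_pop'])
print(f'Statistic: {stat}\nP-Value: {p:.3f}')
if p <= alpha:
    print(f'Statistically significant at alpha = {alpha}')
    print(f'Reject H0: {H0}')
else:
    print(f'Not statistically significant at alpha = {alpha}')
    print(f'Fail to reject H0: {H0}')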

Conclusions

- Statistical tests are great for hypothesis testing

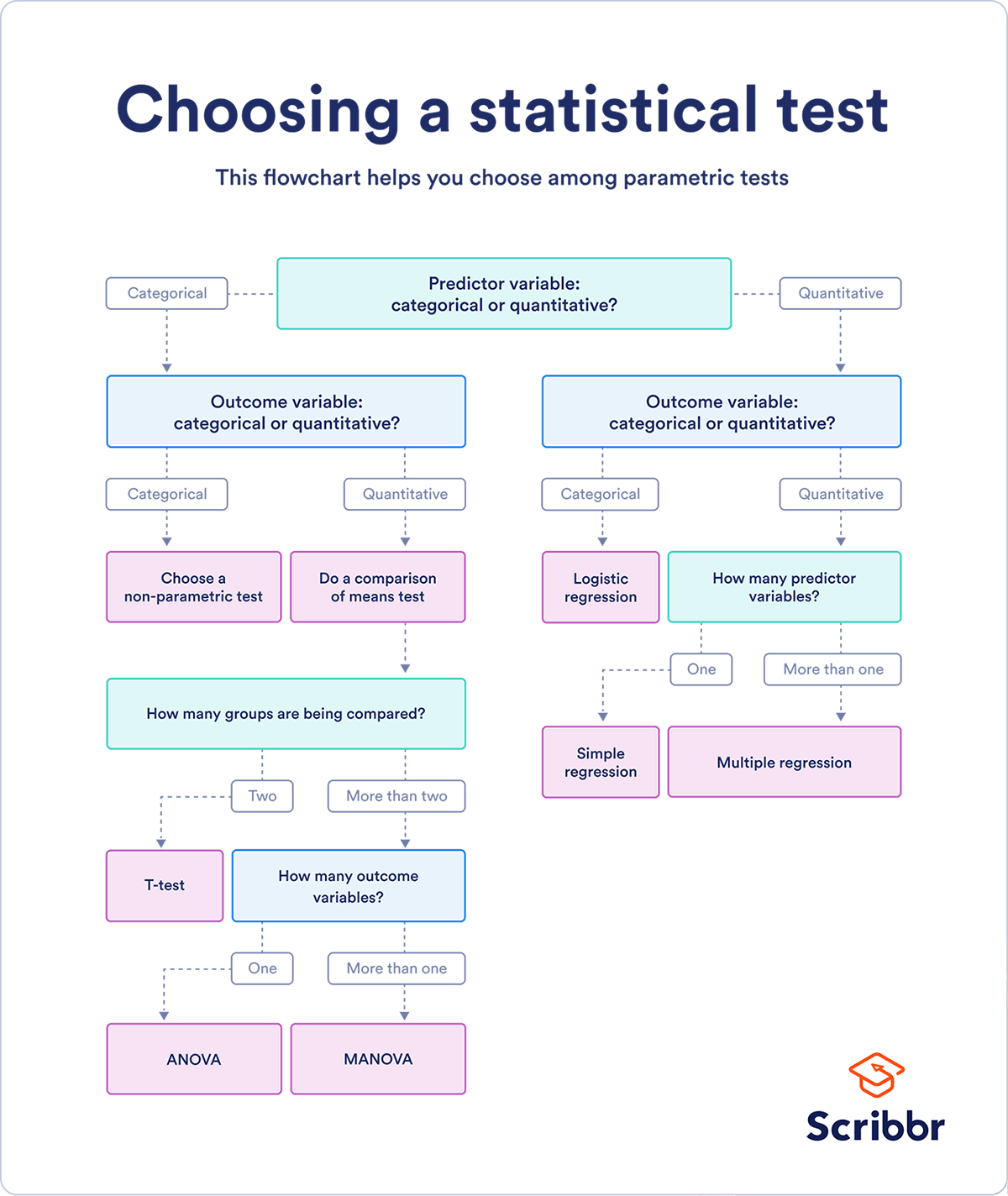

- It is very important to know which type of test to use and when to use it; the kind of variables you have (numerical or categorical, independent or paired) is a good first guide

Follow up topics and questions:

- Look into the Harvey-Collier multiplier test for linearity

- How do we correctly interpret the statistic from each test?

- When does a p-value of 0 mean that the test failed, and when does it not?